Publications

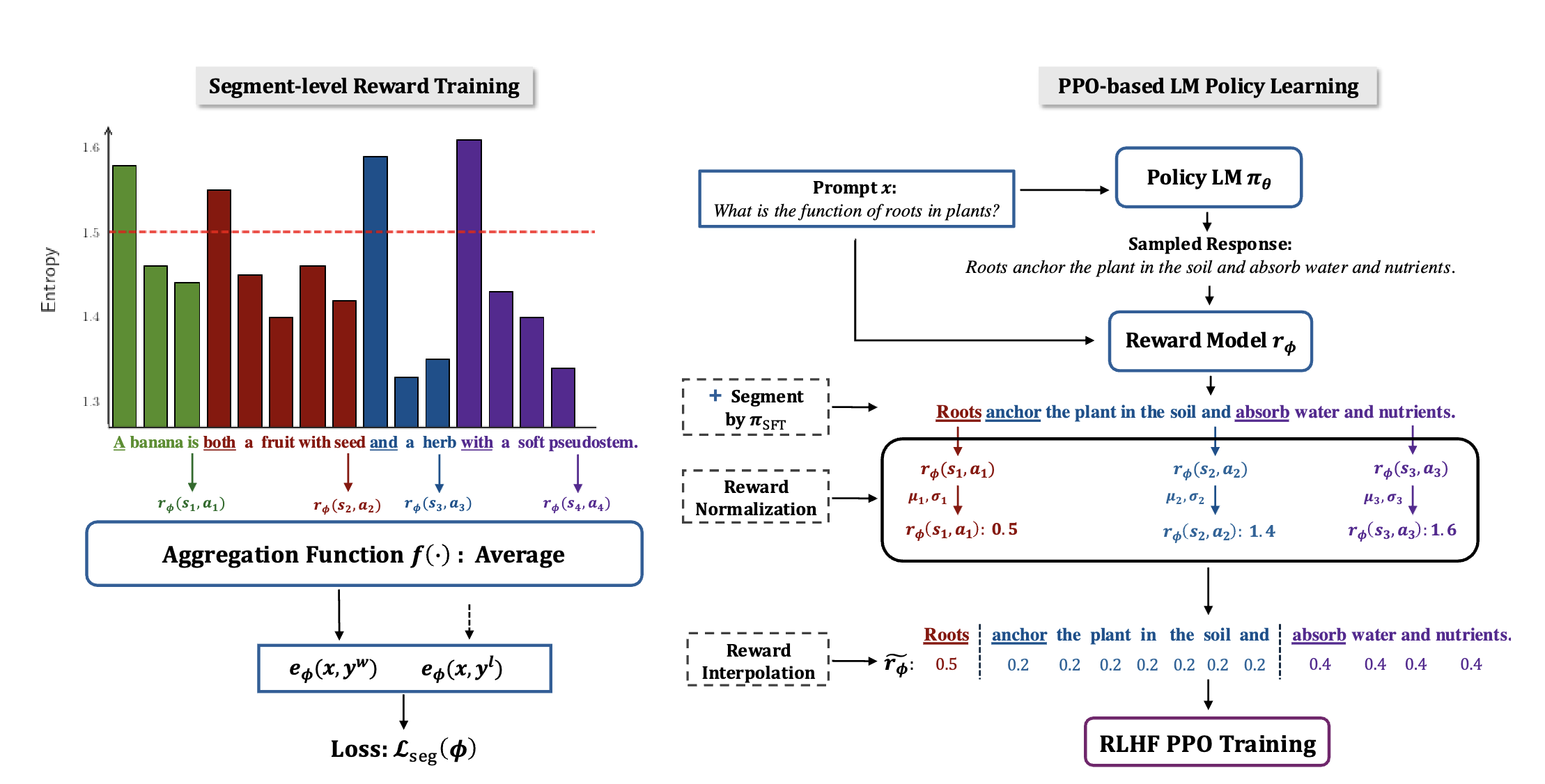

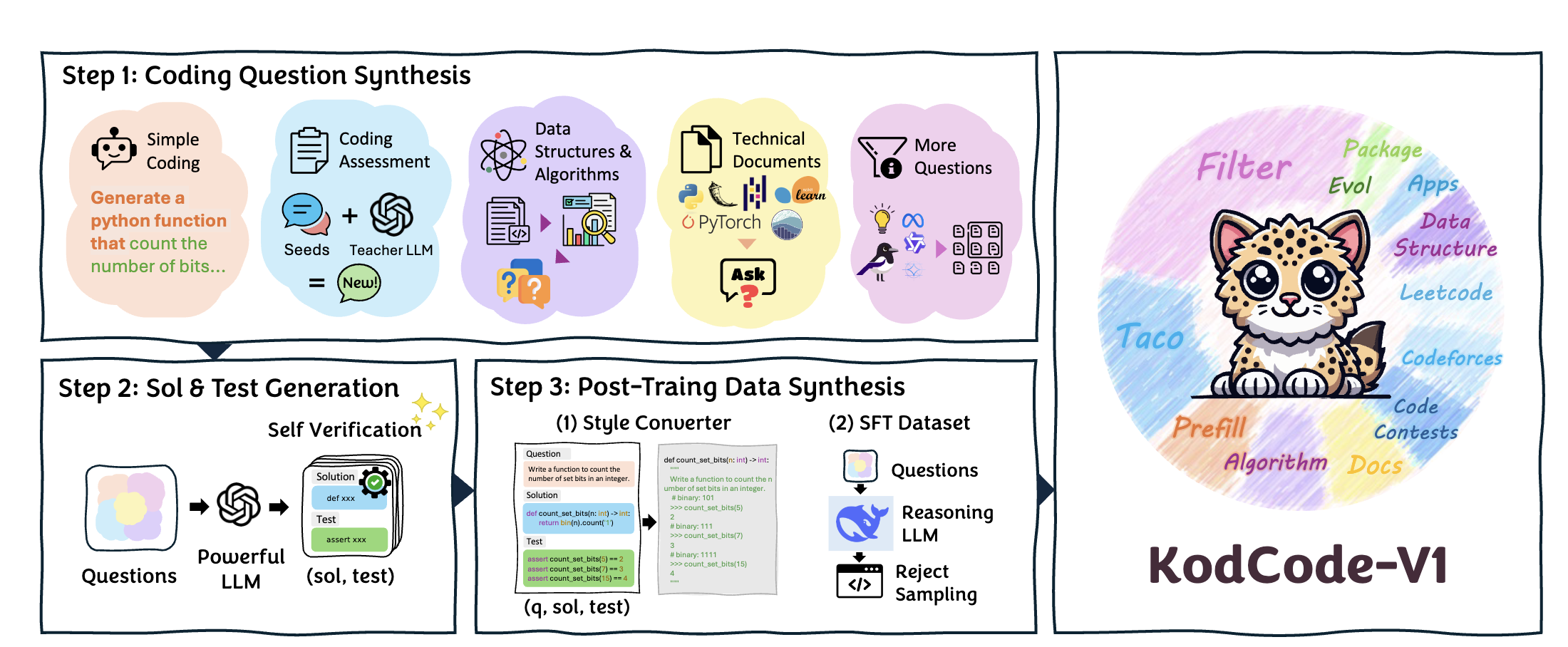

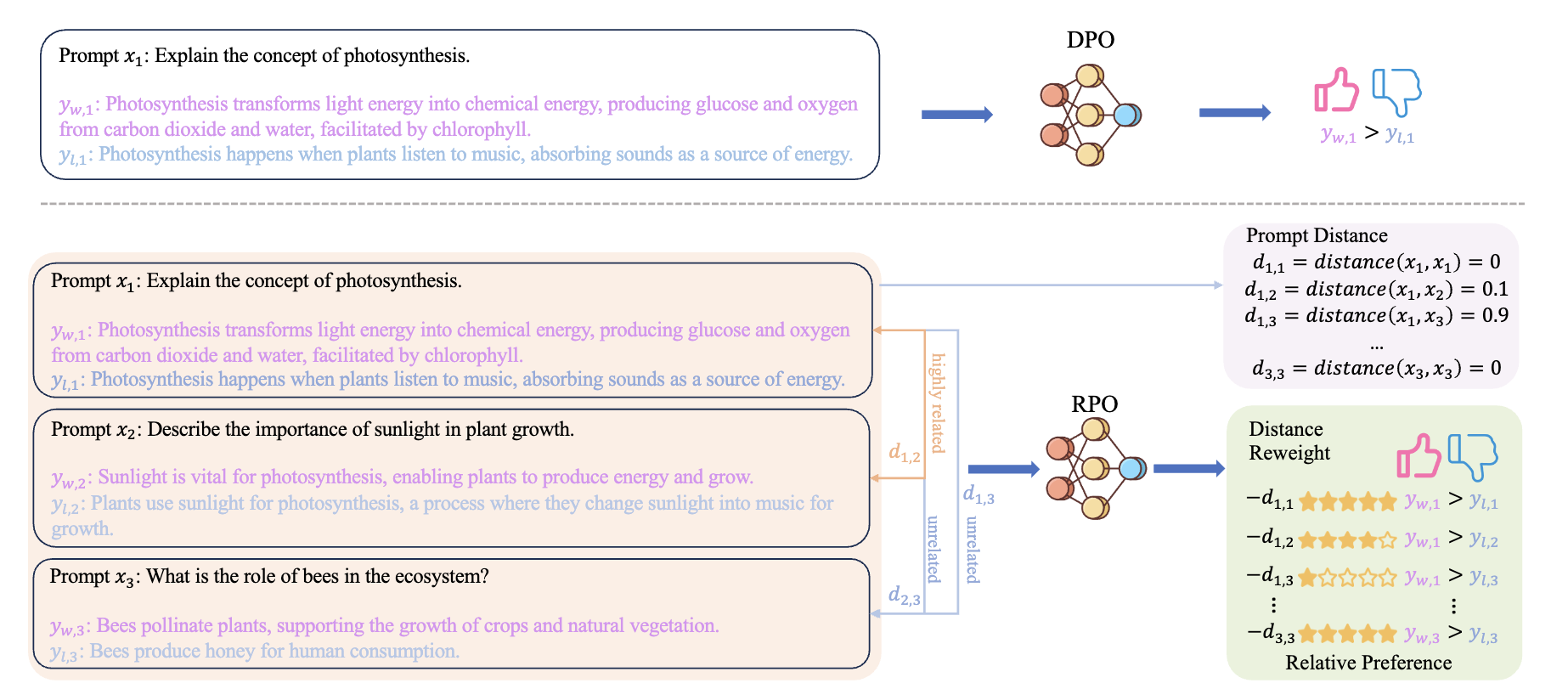

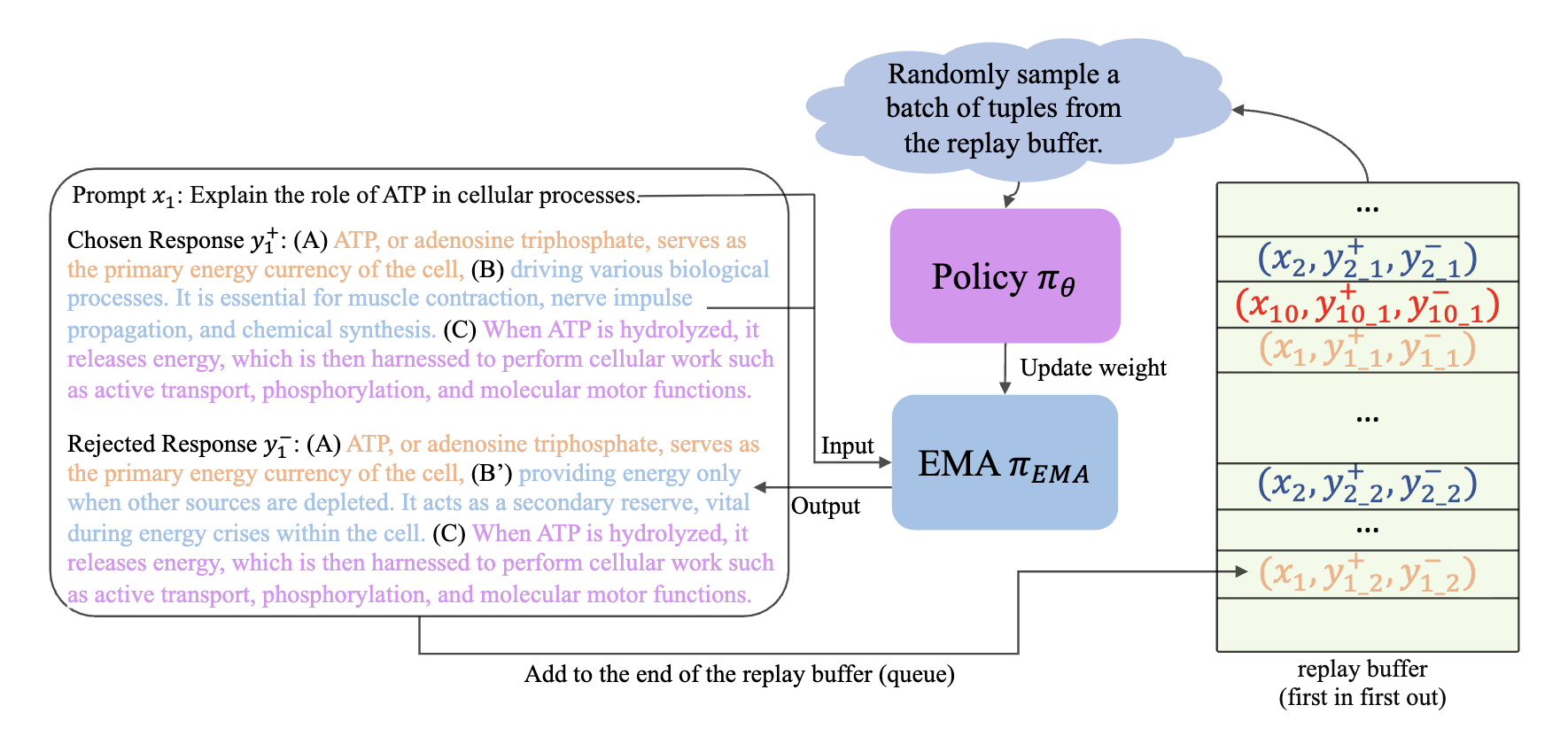

My research focuses on LLM alignment, reasoning, and post-training optimization. On the algorithmic side, I develop DPO-based methods (RPO, SAPO, Diffusion-RPO) and PPO-based methods (Segment-Level PPO) that improve model alignment and the reliability of model reasoning. On the data and verification side, I develop ContextCheck, a sentence-level faithfulness verifier for detecting context-dependent hallucinations. I also contribute to KODCODE, a large-scale, verifiable dataset for training code-generation models.

2025

Under Review ContextCheck: Sentence-Level Faithfulness Verification with Context-Aware Disambiguation

Paper (Coming Soon) / Code (Coming Soon)

2024

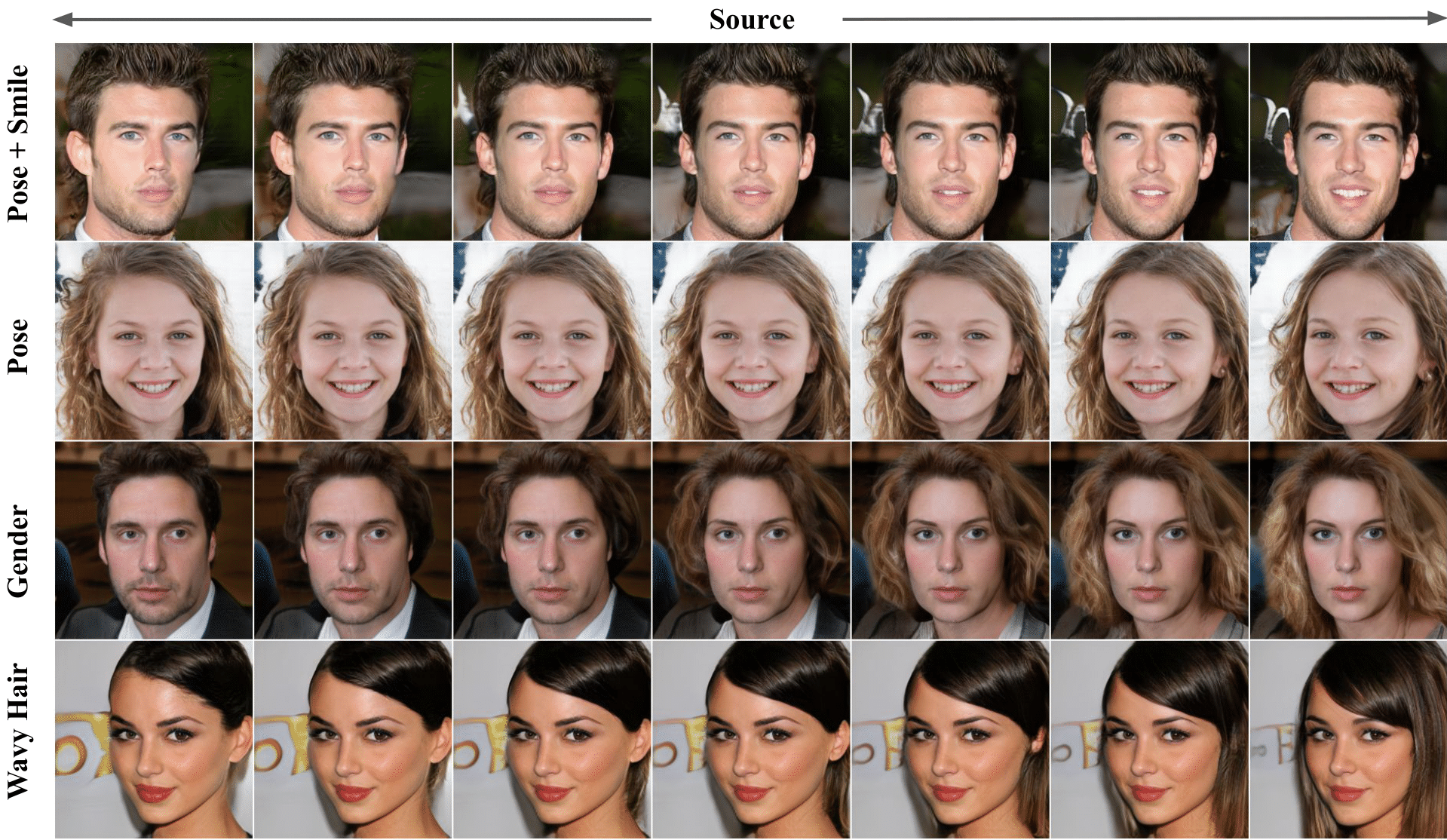

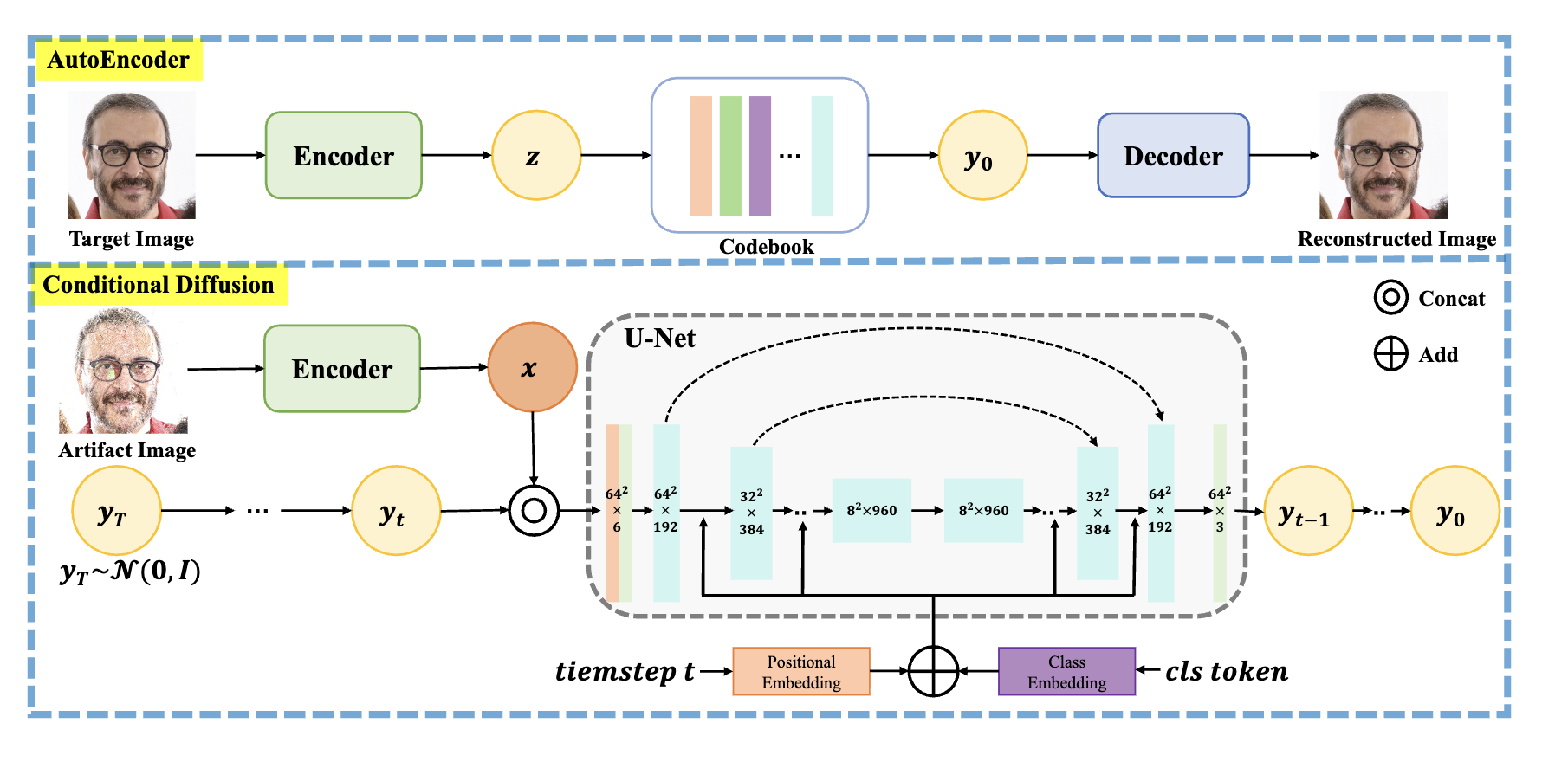

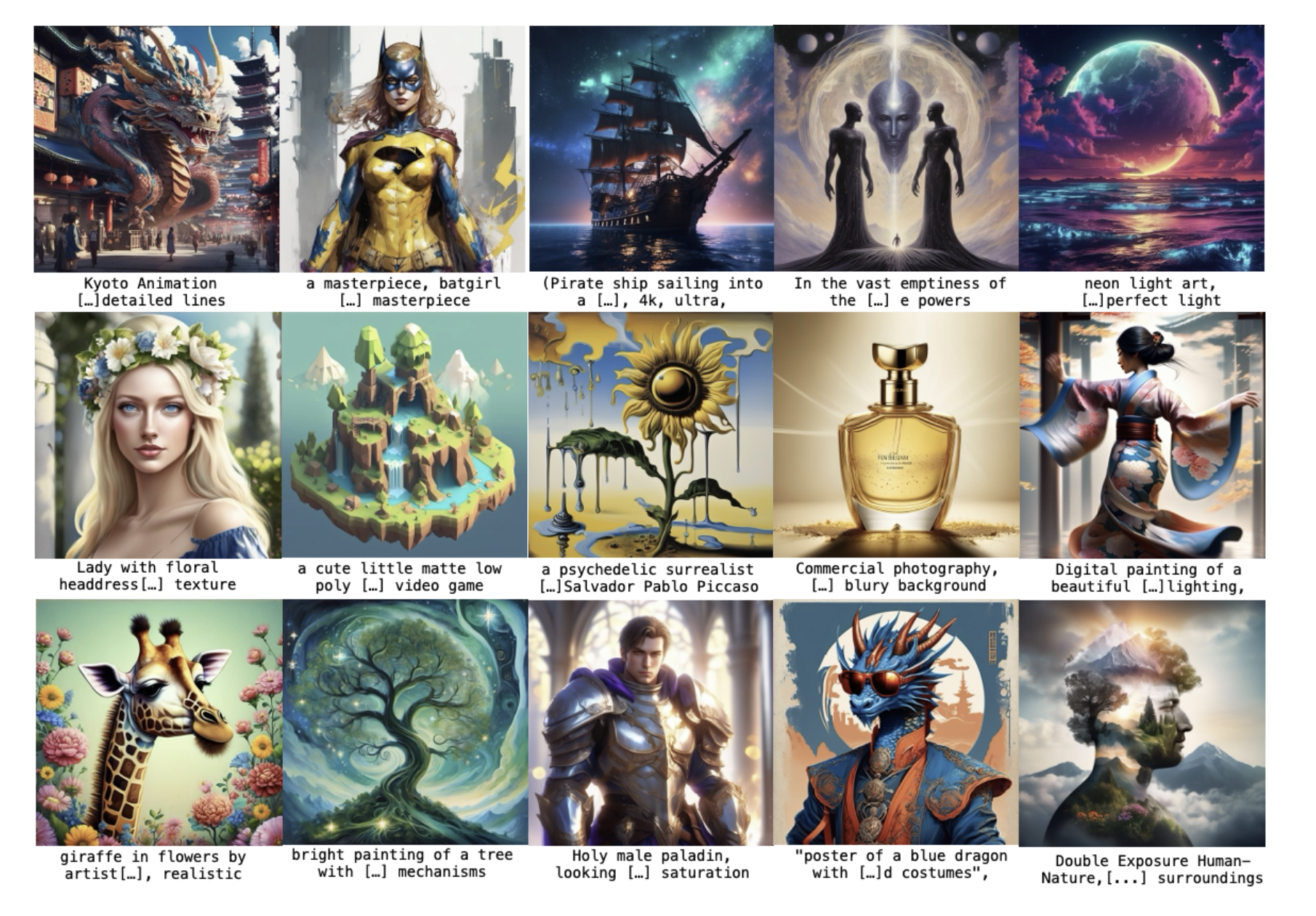

arXiv'2406 Diffusion-RPO: Aligning Diffusion Models through Relative Preference Optimization

2023

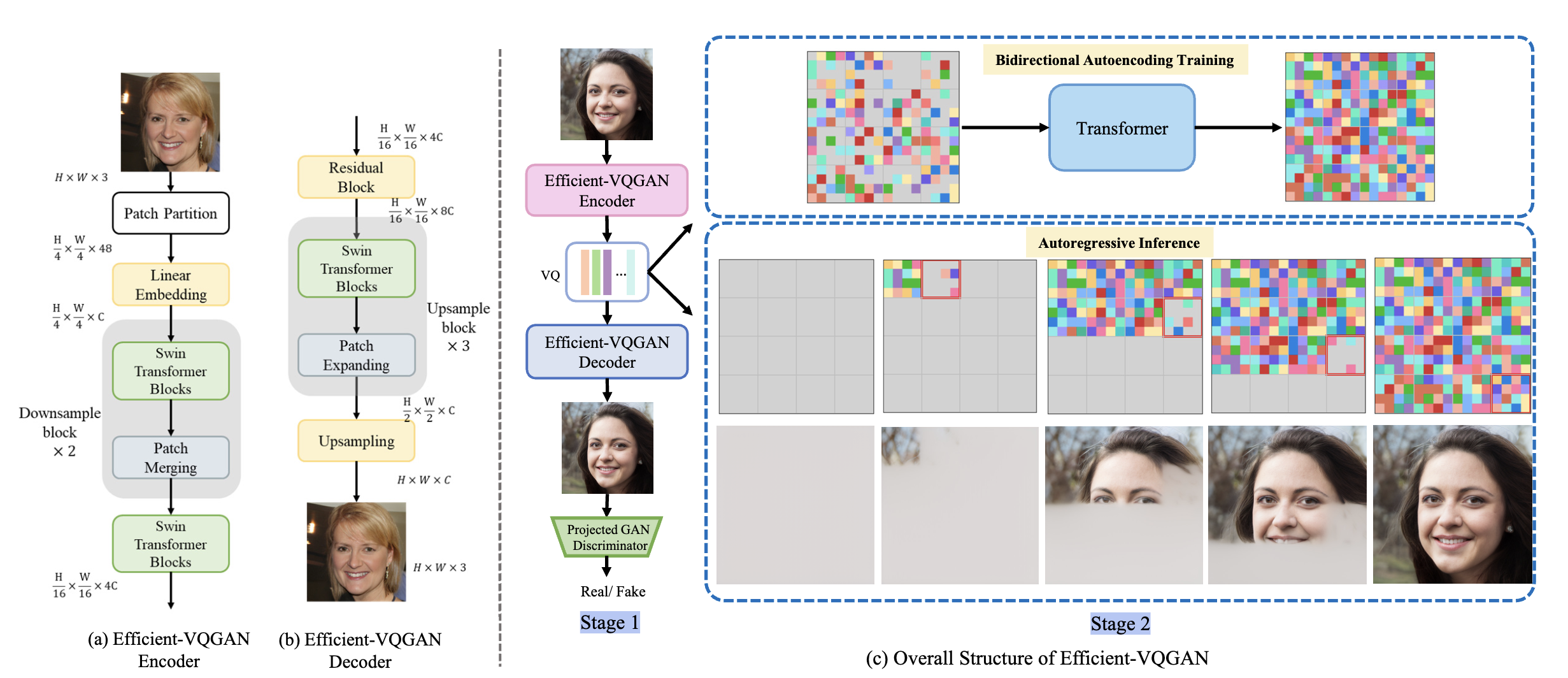

ICCV23 Efficient-VQGAN: Towards High-Resolution Image Generation with Efficient Vision Transformers